Beyond the Code: Fortifying AI-Driven Environments Against Cyber Threats and Instability

Artificial Intelligence (AI) now underpins much of modern digital marketing: it powers intelligent agents, automates workflows, analyzes data at scale, and helps businesses operate more efficiently. But as AI systems grow more complex and more connected, their exposure to cyberattacks and operational failures grows with them.

Two incidents from 2024 showed that even the most trusted tools can fail or, worse, be compromised. For marketers, developers, and businesses that rely on AI-powered systems, cybersecurity and stability are no longer optional; they are mission-critical.

This article explores real-world incidents, their lessons, and actionable steps to strengthen your AI-driven environment.

Table of Contents

- The Dual Edge of AI: Power and Vulnerability

- Lessons from the Front Lines: Real-World Incidents

- Strengthening Your AI-Driven Environment: A DMwithQAF Perspective

- Humanizing Cybersecurity for AI Success

- Conclusion

- Frequently Asked Questions (FAQs)

The Dual Edge of AI: Power and Vulnerability

AI delivers automation, predictive insights, campaign optimization, and smarter decision-making. But these systems rely on:

- complex algorithms

- massive datasets

- interconnected cloud infrastructure

- third-party tools and open-source libraries

Each of these dependencies widens the attack surface. A single weakness, whether in a library, an update, or an integration, can compromise:

- AI model accuracy

- data integrity

- system availability

- user trust and compliance

When an AI-driven system breaks, the failure can cascade into everything that depends on it: campaigns, automation, reporting, and revenue.

Lessons from the Front Lines: Real-World Incidents

1. The XZ Utils Backdoor: A Chilling Supply Chain Attack (March 2024)

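The Incident: In March 2024, Microsoft engineer Andres Freund discovered that XZ Utils versions 5.6.0 and 5.6.1 contained a covert backdoor in liblzma, the compression library shipped with most Linux distributions. The code had been planted by an attacker who spent years earning trust as a co-maintainer of the project. On distributions where the SSH server (sshd) loads liblzma indirectly through systemd, the backdoor let the holder of the attacker's private key execute code remotely. It was caught before reaching most stable distribution releases.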
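
The backdoor shipped only in XZ Utils 5.6.0 and 5.6.1 (tracked as CVE-2024-3094), so a quick first triage step is to check which version a host runs. The Python sketch below parses the output of `xz --version`, which prints both the tool and the liblzma version, and flags those two releases. The function names are our own, and a version string is only a starting point: your distribution's security advisory remains the authoritative answer on whether a given package is affected.

```python
import re
import shutil
import subprocess
from typing import Dict

# XZ Utils releases that shipped the backdoor (CVE-2024-3094).
BACKDOORED_VERSIONS = {"5.6.0", "5.6.1"}


def parse_versions(version_output: str) -> Dict[str, str]:
    """Pull the xz and liblzma versions out of `xz --version` output."""
    versions: Dict[str, str] = {}
    xz_match = re.search(r"XZ Utils\)\s+(\d+\.\d+\.\d+)", version_output)
    lib_match = re.search(r"liblzma\s+(\d+\.\d+\.\d+)", version_output)
    if xz_match:
        versions["xz"] = xz_match.group(1)
    if lib_match:
        versions["liblzma"] = lib_match.group(1)
    return versions


def is_affected(versions: Dict[str, str]) -> bool:
    """True if either the tool or the library reports a backdoored release."""
    return any(v in BACKDOORED_VERSIONS for v in versions.values())


def check_local_xz() -> str:
    """Triage the xz binary on this host, if one is installed."""
    if shutil.which("xz") is None:
        return "xz not found on PATH"
    result = subprocess.run(
        ["xz", "--version"], capture_output=True, text=True, check=False
    )
    versions = parse_versions(result.stdout)
    if not versions:
        return "could not determine xz version"
    if is_affected(versions):
        return f"AFFECTED: {versions}; move to a patched release"
    return f"OK: {versions} is not a known backdoored release"


if __name__ == "__main__":
    print(check_local_xz())
```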

The AI Connection: AI systems rely heavily on open-source components. A compromised library like XZ could:

- give attackers remote access to servers hosting AI models

- expose sensitive training data

- manipulate intelligent agent decision-making

- steal proprietary algorithms or marketing logic

The Lesson: Supply chain security is essential. AI environments must verify every dependency, even trusted open-source tools.
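
One concrete form of dependency verification is hash pinning: record the SHA-256 digest of each artifact (a library archive, a container layer, a model file) once it has been reviewed, and refuse to use any file that no longer matches. The sketch below assumes you keep those pinned digests somewhere you trust; `sha256_of`, `verify_artifact`, and the `verify_artifact.py` script name are hypothetical. Package managers offer the same idea natively, for example pip's hash-checking mode (`--require-hashes`).

```python
import hashlib
import sys
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large archives or model weights never sit fully in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, expected_sha256: str) -> None:
    """Raise ValueError unless the file matches the digest pinned when it was reviewed."""
    actual = sha256_of(path)
    if actual != expected_sha256.strip().lower():
        raise ValueError(
            f"{path.name}: expected sha256 {expected_sha256}, got {actual}; refusing to use it"
        )


if __name__ == "__main__":
    # Hypothetical usage: python verify_artifact.py <file> <pinned-sha256>
    if len(sys.argv) != 3:
        print("usage: verify_artifact.py <file> <pinned-sha256>")
    else:
        target, pinned = Path(sys.argv[1]), sys.argv[2]
        verify_artifact(target, pinned)
        print(f"{target.name}: digest matches the pinned value")
```

Pinning alone would not have caught the XZ backdoor, because the malicious code arrived in official release tarballs. Its value is that a new version only enters your environment when someone deliberately reviews it and updates the pin, rather than through an automatic upgrade.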

2. The CrowdStrike Update Incident: When Stability Falters (July 2024)

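The Incident: On July 19, 2024, a faulty configuration update to CrowdStrike's Falcon sensor crashed Windows machines worldwide, leaving an estimated 8.5 million devices stuck on blue screens or in boot loops. Airlines, hospitals, banks, and many other businesses faced downtime, lost productivity, and disrupted operations, all caused by a legitimate update from a security vendor rather than by an attack.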

The AI Connection: AI systems require a stable operating environment. A single unstable update can:

- stop active AI-driven campaigns

- interrupt data pipelines

- corrupt datasets

- halt automation and intelligent agent workflows

The Lesson: Redundancy, isolated testing environments, and rollback plans must be mandatory for any AI-powered infrastructure.
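
For updates you control, such as new model versions, pipeline code, or OS patches you schedule yourself, a staged (ring-based) rollout turns isolated testing and rollback into a routine. The sketch below applies an update one ring at a time, runs a health check after each ring, and reverts every ring that received the update as soon as one fails. The ring names and the `apply_update`, `is_healthy`, and `roll_back` callbacks are hypothetical placeholders you would wire to your own deployment tooling.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Sequence


@dataclass
class RolloutReport:
    passed: List[str] = field(default_factory=list)       # rings that passed health checks
    failed_ring: Optional[str] = None                     # first ring that failed, if any
    rolled_back: List[str] = field(default_factory=list)  # rings reverted, newest first


def staged_rollout(
    rings: Sequence[str],
    apply_update: Callable[[str], None],
    is_healthy: Callable[[str], bool],
    roll_back: Callable[[str], None],
) -> RolloutReport:
    """Update one ring at a time; on the first failed health check, revert and stop."""
    report = RolloutReport()
    for ring in rings:
        apply_update(ring)
        if not is_healthy(ring):
            report.failed_ring = ring
            # Revert the failing ring first, then every earlier ring, newest first.
            for target in [ring, *reversed(report.passed)]:
                roll_back(target)
                report.rolled_back.append(target)
            return report
        report.passed.append(ring)
    return report


if __name__ == "__main__":
    # Simulated run: the "canary" ring fails, so "production" is never touched.
    events: List[str] = []
    result = staged_rollout(
        ["staging", "canary", "production"],
        apply_update=lambda ring: events.append(f"update {ring}"),
        is_healthy=lambda ring: ring != "canary",
        roll_back=lambda ring: events.append(f"roll back {ring}"),
    )
    print(result)
    print(events)
```

Reverting rings that already passed their checks is a deliberately cautious choice: a failure in a later ring is evidence the update itself may be bad, even if earlier environments have not shown symptoms yet.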

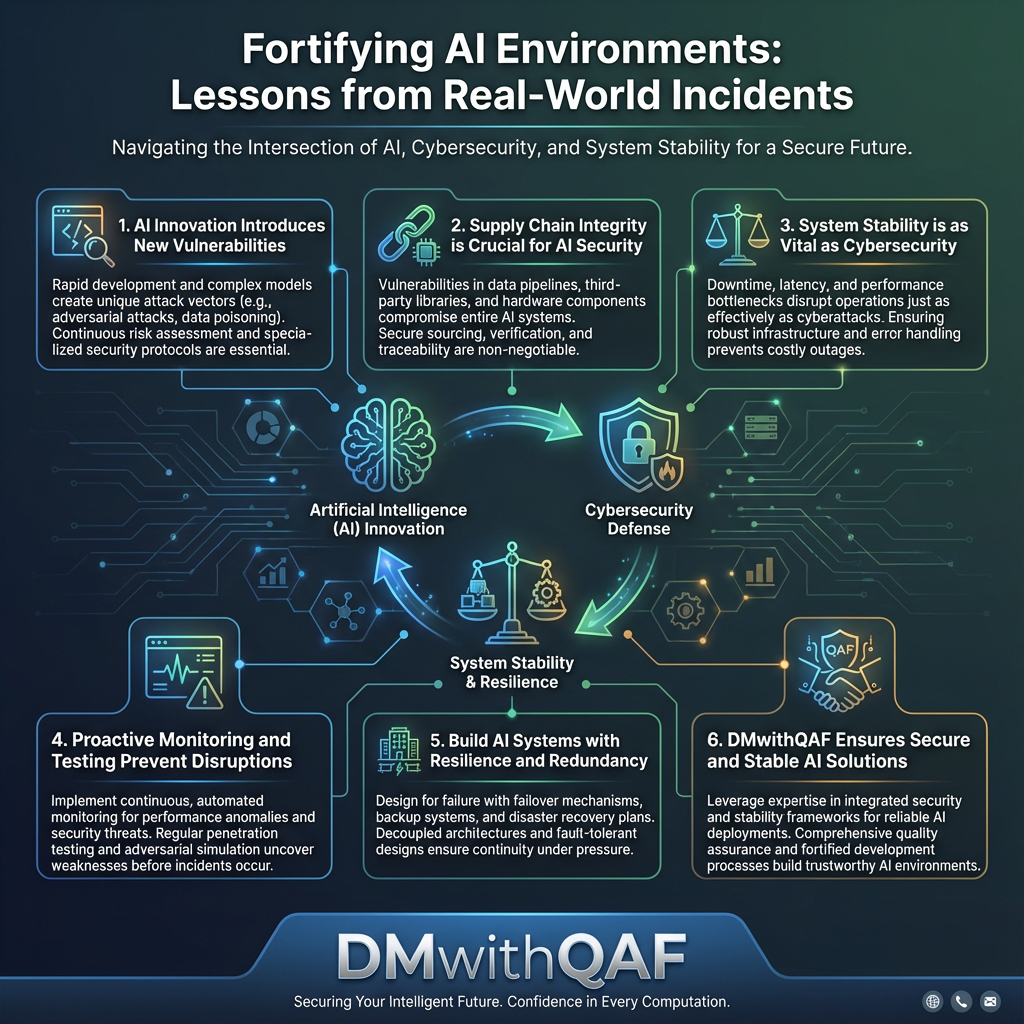

Strengthening Your AI-Driven Environment: A DMwithQAF Perspective

At DMwithQAF, every AI solution we design is built with stability and security at the core. Here are the principles that guide our approach:

- Security-by-Design: Secure code practices, hardened servers, and safe deployment pipelines.

- Proactive Threat Monitoring: Continuous tracking of vulnerabilities and unusual system behavior.

- Resilient Architectures: Redundancy, backup systems, and isolated failover environments.

- Data Integrity & Privacy: Encryption, access control, and compliance with regulations such as GDPR.

- Vendor & Tool Verification: Strict evaluation of all third-party integrations and libraries.

- Incident Response Planning: Clear steps for containing damage and restoring systems quickly.
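
Proactive threat monitoring usually starts with a baseline of normal behavior and an alert when a metric strays far from it. The sketch below flags values whose z-score against a trailing window exceeds a threshold. The metric (for example, failed logins or API errors per minute), the default 30-point window, and the threshold of 3 are illustrative assumptions, not tuned recommendations; a production setup would feed a real monitoring and alerting stack rather than a Python list.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque, Iterable, List, Tuple


def flag_anomalies(
    values: Iterable[float], window: int = 30, threshold: float = 3.0
) -> List[Tuple[int, float]]:
    """Return (index, value) pairs that deviate sharply from the trailing window."""
    history: Deque[float] = deque(maxlen=window)
    flagged: List[Tuple[int, float]] = []
    for i, value in enumerate(values):
        if len(history) == window:  # only score once a full baseline exists
            mu = mean(history)
            sigma = pstdev(history)
            if sigma == 0:
                # Perfectly flat baseline: any change at all is unusual.
                anomalous = value != mu
            else:
                anomalous = abs(value - mu) / sigma > threshold
            if anomalous:
                flagged.append((i, value))
        history.append(value)  # flagged points still join the baseline
    return flagged


if __name__ == "__main__":
    # Hypothetical per-minute error counts with one spike.
    errors_per_minute = [2, 3, 2, 4, 3, 2, 3, 2, 3, 40, 3, 2]
    print(flag_anomalies(errors_per_minute, window=8))
```

Because flagged values still enter the baseline, a sustained shift eventually becomes the new normal; excluding them would keep alerting during a prolonged incident at the cost of never adapting to legitimate changes.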

These practices help AI drive growth without exposing businesses to unnecessary risk.

Humanizing Cybersecurity for AI Success

Cybersecurity is not just technical; it’s deeply human.

Every breach impacts:

- customer trust

- brand reputation

- marketing performance

- your team’s confidence in the tools they use

A secure, stable AI system lets your marketers, strategists, and analysts focus on creativity and results instead of worrying about failure or compromise.

Conclusion

AI-driven digital marketing offers incredible opportunities, but it also demands proactive defense, stability planning, and strategic foresight. By learning from real-world incidents and hardening critical infrastructure, businesses can embrace AI as a growth engine with confidence.

Want to build a safer, more reliable AI-powered ecosystem? Reach out:

Email: info@dmwithqaf.com

Website: www.digitalmarketingwithqaf.com

Frequently Asked Questions (FAQs)

1. Why is cybersecurity more important in AI-driven environments?

AI systems process large volumes of sensitive data and rely on interconnected tools, making them high-value targets for attackers.

2. What is the biggest risk to AI system stability?

Unexpected system updates, faulty patches, or single points of failure can instantly break AI workflows.

3. How can businesses protect their AI infrastructures?

Through secure development practices, supply chain verification, redundancy, and continuous monitoring.

4. Can compromised AI models affect marketing performance?

Yes. Corrupted or manipulated models can produce inaccurate insights, waste ad spend, and trigger incorrect automated actions.

5. What role does DMwithQAF play in AI security?

We help businesses build secure, stable, and reliable AI-powered marketing ecosystems with both technical and strategic safeguards.